Traditional computer programs rely on rigid logic, yet the real world is full of ambiguity. The arrival of Large Language Models (LLMs) introduces a powerful new capability, judgment, so computer programs can now make “good enough” decisions, as humans can. Having judgment means that programs are no longer limited by what can be specified down to the level of numbers and logic. Judgment is what AI could not do robustly before LLMs. Practitioners could program in particular logical rules or build machine learning models to make particular judgments (such as creditworthiness), but these structures were never broad enough or dynamic enough for widespread and general use. These limitations meant that AI and machine learning were used in pieces, but most programming was still done in the traditional way, requiring an extreme precision that demanded an unnatural mode of thinking. LLMs are changing this entirely.

Judgment and Functions

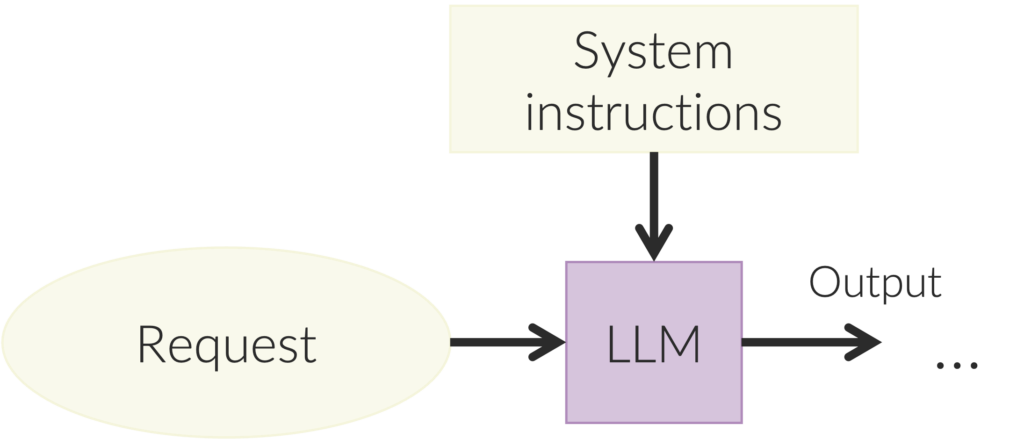

Most of us know LLMs through conversational tools like ChatGPT, but in programming, their true value lies in enabling judgment, not dialogue. Judgment lets programmers create a new kind of flexible function, so computer systems can expand their scope beyond what can be rigidly defined with explicit criteria. The figure depicts a judgment-enabled function. The request is the input to the function, the system instructions describe what you want the function to do, and the output is the function’s flexible result. The request and the system instructions together are often called the prompt.

For example, if the purpose of the function was to determine which account an expense should be billed to, the system instructions would consist of descriptions of different kinds of accounts and what expenses typically go to them. The system instructions are consistent across calls, but the request is different each time the function is called. The output of the function can be just about anything, such as a number, natural language text, or a structured document in JSON.
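A judgment-enabled function of this kind might be sketched as follows. This is only an illustration: the account names, the `make_judgment_function` helper, and the `fake_llm` stand-in are hypothetical, and a real system would replace `fake_llm` with a call to an actual model.

```python
from typing import Callable

# Hypothetical system instructions for the expense example; they stay
# the same on every call.
SYSTEM_INSTRUCTIONS = """You assign expenses to accounts.
Accounts:
- TRAVEL: flights, hotels, taxis, and meals while traveling
- SOFTWARE: licenses, subscriptions, and cloud services
- OFFICE: furniture, stationery, and other supplies
Reply with exactly one account name."""

ALLOWED_ACCOUNTS = {"TRAVEL", "SOFTWARE", "OFFICE"}

# An LLM is modeled as a callable: (system_instructions, request) -> output.
LLM = Callable[[str, str], str]


def make_judgment_function(system_instructions: str, llm: LLM) -> Callable[[str], str]:
    """Bind fixed system instructions to an LLM, leaving only the request as input."""
    def judge(request: str) -> str:
        return llm(system_instructions, request).strip()
    return judge


def fake_llm(system_instructions: str, request: str) -> str:
    """Stand-in for a real model call (an assumption, so the sketch runs offline)."""
    text = request.lower()
    if "flight" in text or "hotel" in text:
        return "TRAVEL"
    if "subscription" in text or "license" in text:
        return "SOFTWARE"
    return "OFFICE"


classify_expense = make_judgment_function(SYSTEM_INSTRUCTIONS, fake_llm)


def classify_checked(request: str) -> str:
    """Reject any output that is not one of the accounts in the instructions."""
    account = classify_expense(request)
    if account not in ALLOWED_ACCOUNTS:
        raise ValueError(f"unexpected account: {account!r}")
    return account
```

Because the output of a flexible function is not guaranteed to follow the requested format, `classify_checked` validates it before the rest of the program relies on it.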

Judgment and Workflows

These judgment-enabled functions can be linked together into workflows, such as the one shown below. The LLMs can be used as nodes but also to make decisions about flows. For example, a workflow was implemented with Microsoft Copilot Studio to help the consulting firm McKinsey route inbound emails to the McKinsey person best able to handle them. The system read the inbound email and looked at relevant external information and then sent the email on to the best person.

These types of workflow are often called “agents,” but as Woody from Toy Story might say, “That’s not agents; that’s functions with judgment.” We will see later what a proper agent looks like. For now, we can think of this workflow as a graph. (Note that LangGraph provides a good set of abstractions for building these workflows if you are just getting started.)
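To make the graph view concrete, here is a minimal sketch in plain Python rather than in any particular framework. The node names, the addresses, and the keyword rules standing in for LLM judgments are all hypothetical.

```python
from typing import Callable, Dict

State = Dict[str, str]


def classify_topic(state: State) -> State:
    """Judgment node (stubbed with keywords): label the topic of the email."""
    body = state["email"].lower()
    topic = "energy" if "grid" in body or "solar" in body else "general"
    return {**state, "topic": topic}


def route(state: State) -> str:
    """Judgment about flow: pick the name of the next node."""
    return "energy_desk" if state["topic"] == "energy" else "front_desk"


def energy_desk(state: State) -> State:
    return {**state, "assignee": "energy-team@example.com"}


def front_desk(state: State) -> State:
    return {**state, "assignee": "frontdesk@example.com"}


# The graph: node names mapped to the functions that implement them.
NODES: Dict[str, Callable[[State], State]] = {
    "energy_desk": energy_desk,
    "front_desk": front_desk,
}


def run_workflow(email: str) -> State:
    """Run the classification node, then follow the edge chosen by route()."""
    state = classify_topic({"email": email})
    return NODES[route(state)](state)
```

In a real workflow, both `classify_topic` and `route` would be judgment-enabled functions backed by an LLM; the surrounding graph stays ordinary code.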

Judgment from System Instructions

We see in the above figures that much of traditional programming is shifting to writing system instructions. Writing effective instructions requires having a mental model of how LLMs are trained. This mental model should consist of two parts: (1) LLMs interpolate what is on the internet, and (2) LLMs are trained to provide helpful and safe responses. For interpolation, LLMs draw on vast internet data to generate responses mimicking how people might reply online. So if you ask the LLM about planting fig trees in central Texas, it’s not going to think from first principles about climate and soil composition; it’s going to respond based on what people on the internet say. This limited reasoning means that if your LLM needs domain-specific knowledge that isn’t widely available on the internet, you need to add that knowledge, along with examples, to the instructions. LLMs are amazing at pattern matching and can process more detailed instructions than many humans have patience for, so you can be as precise and detailed as you want.

The second part of your mental model should be that LLMs are trained to provide helpful and safe responses. This second training means that the responses won’t be exactly what someone on the internet would say. The classic Stack Overflow response ignores your question and smugly suggests that you redo your work using karate-lambda calculus; responses from LLMs, by contrast, are shaped to be direct and polite answers to questions. It also means that sometimes the LLM will refuse to answer if it thinks the request is dangerous. Different LLM providers offer different settings for this, but in general it helps to tell the LLM why it should respond, such as telling it what role it plays in society: “You are a medical assistant, please respond based on the following criteria …”

As LLMs begin to be used more in programs, your intellectual property will increasingly take the form of these instructions. We often hear about “prompt engineering,” but the term is misleading, suggesting clever tricks to manipulate LLMs rather than clear, precise instructions. In addition to understanding how LLMs are trained, crafting effective prompts requires clearly specifying the task and necessary information. The instructions will often include documentation that was originally written for people to follow, and going forward, we will increasingly find ourselves writing documentation directly for LLMs. This documentation specifies how the system should work, and the system is then “rendered” into existence by the LLMs. In my work, I often find that if the LLM doesn’t do what I want it’s because I haven’t finished thinking yet. The process of rendering the design shows where my design is incomplete.

The judgments provided by LLMs are currently not as good as those of a conscientious human, but they are often better than those of a distracted human. More significantly, the kinds of mistakes that LLMs make are different from those a human would make, so workflow designs need to take unusual failure modes into account. One way to improve the judgments of LLMs is to make sure that they have enough context to answer the question.

Judgment with Context

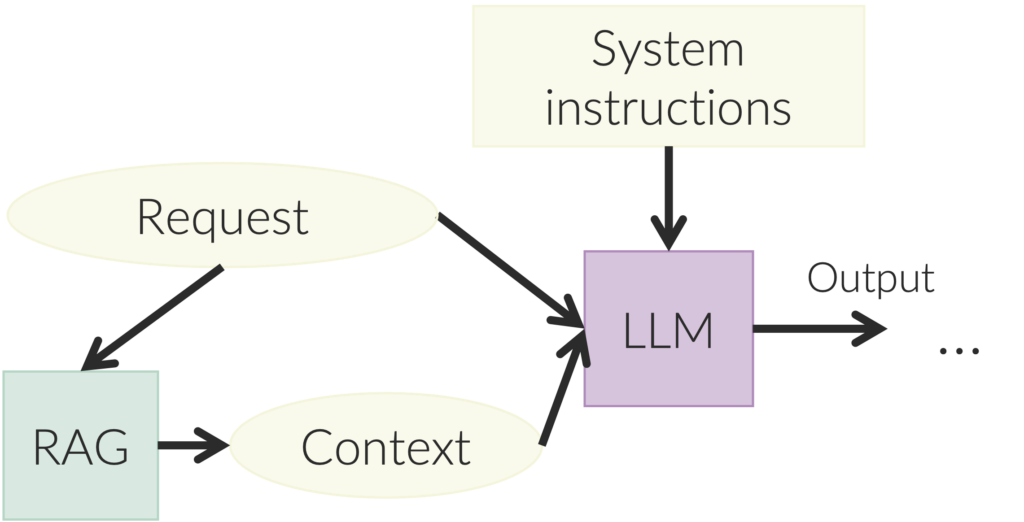

LLMs can make better judgments when they have sufficient context. For example, an LLM acting as a law assistant may need to look up details about the law related to the particular case in the request. These details serve as context for the request and can then be passed to the LLM along with the request. One common way to collect the right context for a request is called retrieval-augmented generation (RAG). You’ve probably heard about RAG. When a request comes in, a process converts the request to a vector (an embedding) and then compares that vector against the vectors of chunks of text stored in a database. The chunks of text whose vectors are most similar to the request’s vector are then added to the context.
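The retrieval step can be sketched in a few lines. This is a toy version under stated assumptions: `embed` uses simple word counts in place of a learned embedding model, the chunks live in a Python list rather than a vector database, and the example legal text is invented for illustration.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use a learned embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(request: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks whose vectors are most similar to the request's vector."""
    query = embed(request)
    return sorted(chunks, key=lambda c: cosine(query, embed(c)), reverse=True)[:k]


def build_prompt(request: str, chunks: List[str], k: int = 2) -> str:
    """Add the retrieved chunks to the context that accompanies the request."""
    context = "\n".join(retrieve(request, chunks, k))
    return f"Context:\n{context}\n\nRequest: {request}"


# Invented example chunks for a hypothetical law assistant.
CHUNKS = [
    "A lease may be terminated early if the tenant gives sixty days notice.",
    "Parking permits are issued by the city transportation office.",
    "The security deposit must be returned within thirty days of the lease ending.",
]
```

Calling `build_prompt("When must the security deposit be returned?", CHUNKS, k=1)` places the deposit chunk in the context, and the resulting text would be sent to the LLM alongside the system instructions.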

A context can be any kind of information, such as computer code, and LLMs are now reading our code and acting as assistants to programmers. As these assistants get better, they have incorporated more context. Recently, we have seen a progression of four levels of programming assistant:

- They started as just a smart autocomplete that only looked at the current file.

- They then began offering suggestions for code fixes based on requests by looking at the current file and maybe a few others.

- They then progressed to actually changing the code based on requests (e.g., the so-called “agent mode” in GitHub Copilot).

- In their latest incarnation, they can look at the whole codebase as context and make general changes (such as with Claude Code and the new OpenAI Codex).

Funnily enough, extra-compiler semantics such as comments and good variable names used to matter only to us humans and not to the computer running the code. With LLM assistants, we now have computers reading the code too, and those good practices do matter to them: they make the context more useful by enabling the LLM to map the code to what it learned during training.

Judgment and Calling External Tools

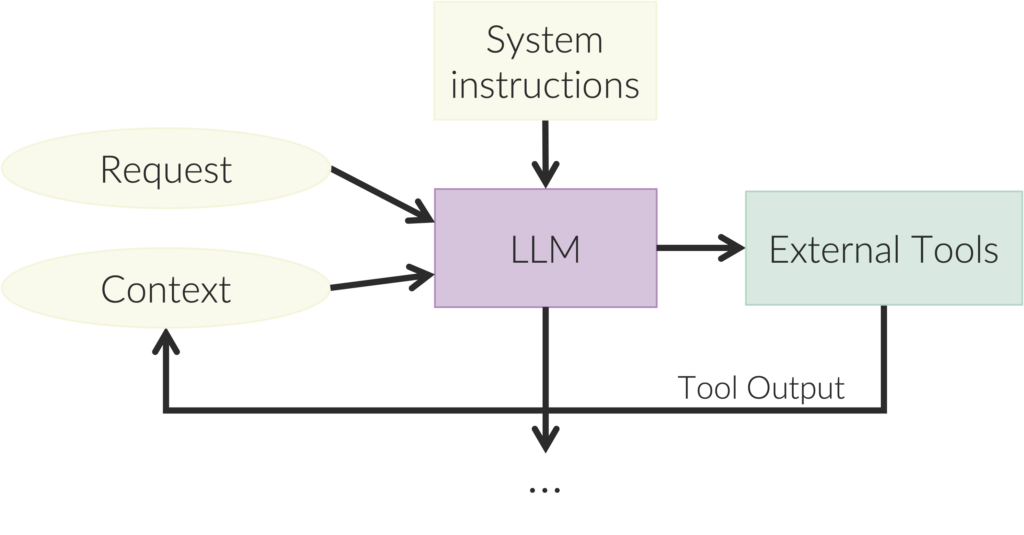

LLMs are surprisingly human-like in their weaknesses in that they can’t keep track of complex state and how that complex state will unfold. We humans are good at getting the gist, but for specifics, we need tools, such as databases. Similar to how humans use calendars, calculators, and computers, LLMs can call external tools. These tools can be a web search, a function call of regular code, or a database lookup. As the figure shows, just like with RAG, these tools add to the context to help the LLM better respond to the request. The difference from RAG is that the LLM chooses which tool to call, and it continues to call tools until it has sufficient context to answer the request, a pattern sometimes called ReAct.
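The tool-calling loop can be sketched as below. Everything here is illustrative: the two tools, the account data, and `scripted_policy`, which stands in for the LLM’s choice of the next step, are hypothetical.

```python
from typing import Callable, Dict, List, Tuple


def lookup_balance(account: str) -> str:
    """Tool: a database lookup, faked here with a dictionary."""
    balances = {"alice": "120.50"}
    return balances.get(account, "unknown")


def add(expression: str) -> str:
    """Tool: regular code that sums numbers written as 'a+b+...'."""
    return str(sum(float(term) for term in expression.split("+")))


TOOLS: Dict[str, Callable[[str], str]] = {"lookup_balance": lookup_balance, "add": add}

# A policy returns (action, argument); the action "final" ends the loop.
Policy = Callable[[str, List[str]], Tuple[str, str]]


def scripted_policy(request: str, context: List[str]) -> Tuple[str, str]:
    """Stand-in for the LLM deciding which tool to call next (an assumption)."""
    if not context:
        return ("lookup_balance", "alice")
    if len(context) == 1:
        return ("add", f"{context[0]}+30")
    return ("final", f"New balance: {context[-1]}")


def run_with_tools(request: str, policy: Policy, max_steps: int = 5) -> str:
    """Call tools chosen by the policy until it decides it has enough context."""
    context: List[str] = []
    for _ in range(max_steps):
        action, argument = policy(request, context)
        if action == "final":
            return argument
        context.append(TOOLS[action](argument))  # tool output joins the context
    raise RuntimeError("step budget exhausted without a final answer")
```

The `max_steps` budget is a design choice worth keeping in a real system, since a model that never decides it has enough context would otherwise loop forever.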

Tool calling in this way is like a robot sensing and acting in the world. Calling tools is where the system gets information. It can choose which tool to call, like a human choosing where to look as we enter a new room. Tools can also be considered “actions” if they change the external world, such as by adding a record to a database. This pattern is getting us toward agency.

Judgment and Agents

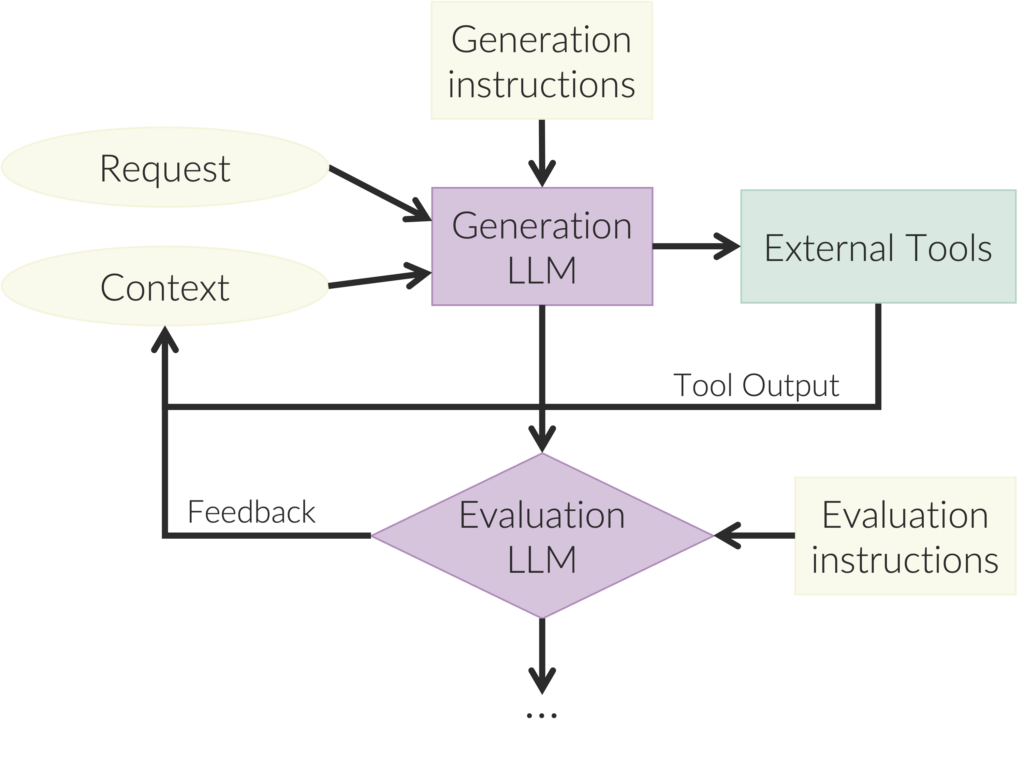

An agent is an entity capable of making choices and reflecting on the quality of those choices. This second-level reflection is necessary for a recognition of a “self” that is separate from the outside world. To achieve full agency, we combine a Generation LLM, which uses judgment to produce outputs, with an Evaluation LLM, which uses judgment to assess those outputs, as shown in the figure. Together, these two LLMs form a self-monitoring system in which the Evaluation LLM provides feedback that continually improves the outputs of the Generation LLM.

These dual judgments enable an agent to search through a space of solutions to a problem. Search is an old approach in AI where the system is in some state, it evaluates a set of actions, and each action takes it to a new state. The system can then explore these states until it finds a state that is a good solution to the problem. There are two challenges with applying search to interesting problems. The first is that the space of possible states is often too big to exhaustively explore. Judgment addresses this first challenge by enabling the agent to smartly choose which action to try next. The second challenge to applying search is that you need some way of knowing whether you are making progress toward the solution and whether the current state is a good-enough solution to the problem. Judgment also addresses this challenge by providing those signals. Search is closely related to another venerable AI method called generate and test, where the generate function creates candidates and the test function determines the value of those candidates, and both of those processes benefit from judgment.

For an example of search (or generate and test), imagine that the LLM system is designing a new drug. The Generation LLM needs to propose good candidates or changes to existing candidates, and the Evaluation LLM needs to estimate whether such a candidate might work.

Judgment and Independent Code Generation

Computer code is special because it consists of instructions and so can create anything. It is the design of a machine that is made tangible when it is executed by a computer.

The agent-based search process described above can independently generate computer code. At its simplest, one of the external tools provided to the LLM can be a code interpreter in a sandbox that runs the generated code and adds the output to the context. For example, if the user wants to know the number of days until the winter solstice, the LLM can generate a program that computes it and pass that program to the code interpreter tool. The interpreter then runs the program and adds the output, the computed number of days, to the context. The LLM reads this context, determines that it has the answer, and passes it to the user. Computer-generated code like this is interesting because we are used to viewing computer programs as important artifacts that need to be understood and maintained, like contracts between us and the systems. But with LLMs generating code on the fly, a lot of code may become more akin to spoken language than written documents. The code is generated and run when needed (spoken) and then discarded, as the work and conversation move on.
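The throwaway program in the solstice example might look something like this. It is a sketch under a stated assumption: the solstice is fixed at December 21 (the Northern Hemisphere winter solstice usually falls on December 21 or 22), so the result can be off by a day in some years.

```python
from datetime import date


def days_until_winter_solstice(today: date) -> int:
    """Days from `today` to the next winter solstice.

    Assumption: the solstice is treated as December 21 every year.
    """
    solstice = date(today.year, 12, 21)
    if today > solstice:
        # This year's solstice has passed, so count to next year's.
        solstice = date(today.year + 1, 12, 21)
    return (solstice - today).days


if __name__ == "__main__":
    # The interpreter tool would capture this printed output for the context.
    print(days_until_winter_solstice(date.today()))
```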

More sophisticated code generation entails the Generation LLM writing the code and the Evaluation LLM determining how to improve the code. This Evaluation LLM can be paired with a verifiable evaluation when one is available, such as whether the code solves a math problem or how fast it can solve it. If multiple candidates are generated and mixed and improved, code generation can be akin to Darwinian evolution, such as with the recent methods of AlphaEvolve and Darwin Gödel Machines. This generated code can have any purpose, such as maximizing some mathematical function or creating a design for a spaceship. The judgment provided by the Evaluation LLM is particularly important when there is no concrete evaluation function and solutions must be judged based on fuzzy criteria.
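Pairing a verifiable evaluation with a judgment-based one can be sketched as follows. The candidate programs, the test cases, and the `judge` function are hypothetical: `judge` stands in for the Evaluation LLM by simply preferring shorter code. This is not how AlphaEvolve or Darwin Gödel Machines are implemented, and `exec` here is not a sandbox.

```python
from typing import List, Tuple

# Hypothetical candidates, as if written by a Generation LLM for the task
# "return the sum of the integers from 1 to n".
CANDIDATES: List[str] = [
    "def solve(n):\n    return n * n\n",                 # incorrect
    "def solve(n):\n    return sum(range(1, n + 1))\n",  # correct
    "def solve(n):\n    return n * (n + 1) // 2\n",      # correct
]

# Verifiable evaluation: (input, expected output) pairs.
CASES: List[Tuple[int, int]] = [(1, 1), (4, 10), (10, 55)]


def passes(source: str) -> bool:
    """Run a candidate against the test cases; any error counts as failure."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # NOT a sandbox; use real isolation in practice
        return all(namespace["solve"](n) == expected for n, expected in CASES)
    except Exception:
        return False


def judge(source: str) -> float:
    """Stand-in for the Evaluation LLM's fuzzy judgment: shorter code scores higher."""
    return -len(source)


def select_best(candidates: List[str]) -> str:
    """Filter with the verifiable check first, then rank survivors by judgment."""
    survivors = [c for c in candidates if passes(c)]
    if not survivors:
        raise ValueError("no candidate passed the verifiable evaluation")
    return max(survivors, key=judge)
```

Running the hard check first means the fuzzy judgment only has to choose among candidates that are already known to be correct on the test cases.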

Code generation can also be used to build new external tools available to the agent, so that the system can expand itself. One example is the Voyager system, which builds tools made of code to help it play Minecraft. A system that can extend itself represents a significant advance for machine learning. Neural networks such as LLMs are trained with millions of examples, with each example modifying the model parameters ever so slightly. This process is too slow. The future lies with autonomous systems that can write code that makes them smarter in leaps and bounds. This self-generation is a kind of autopoiesis, which is the process of building one’s own parts, like a biological system.

Judgment and Life and Consciousness

As more code is generated by AI, we may see some computer systems become incomprehensible, so that understanding these systems will be like trying to disentangle what is happening inside a biological cell. This transition from humans writing code to computer systems writing their own code represents a fundamental turning point. Computer code has always been blindly executed by CPUs that are incapable of making judgments about that code to prevent things such as buffer overflows, SQL injection, and other forms of blind stupidity. But a computer system capable of reading and writing its own code marks the transition from a passive automaton to an active participant. This ability to read their internal programming means that computers can perform introspection and can look multiple levels down into their own actions and motivations. For the first time, computers can run processes but not be entirely in those processes. They can use internal attention to focus on different aspects of themselves, generating a kind of strange loop that might someday lead to consciousness.

Thanks to Courtney Sprouse and Kyle Ferguson for their helpful comments and suggestions.