Large language models (LLMs) such as ChatGPT are surprisingly good, but they can’t expand human knowledge because they only interpolate existing information already on the internet. To create new knowledge and solve problems that no human has ever solved, AI needs to be adaptively embodied and be able to build causal models of the world. Embodiment entails that the AI has purpose, which enables it to be more flexible than stimulus-response agents, such as LLMs, by searching for ways to achieve its goals. Adaptive embodiment means that the AI can build meaning by learning representations and modifying how it maps sensory input to those representations. This learning and mapping gives the AI additional sensitivity to the environment. The final piece of embodiment is that the AI requires an executive system—a kind of proto-consciousness—that enables self-correction at various levels of operation. In addition to adaptive embodiment, the AI must be able to build causal models that enable it to know what to change to achieve its goals and to predict the future by simulating internal states forward.

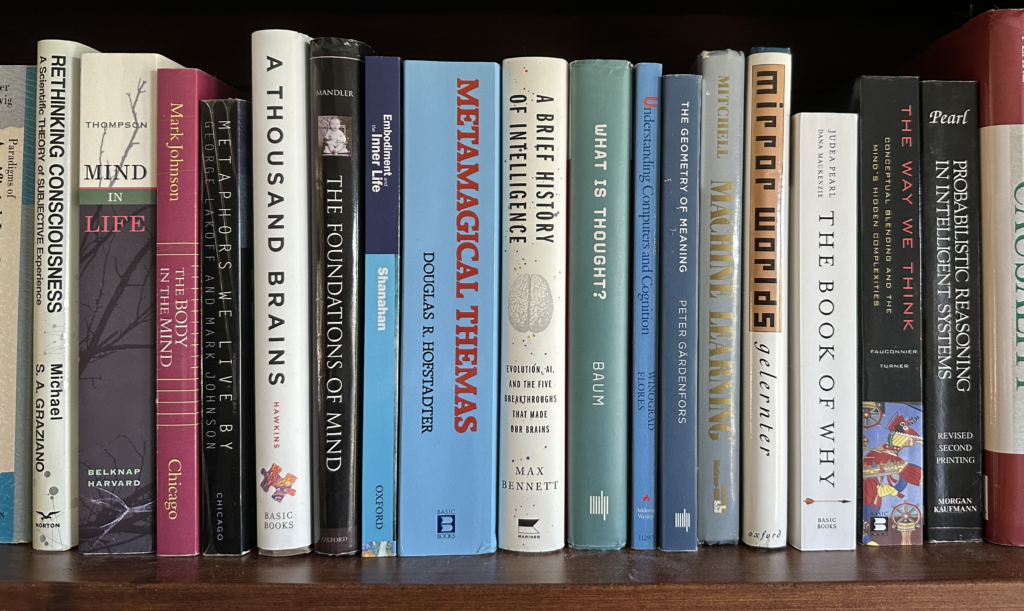

I’ve read dozens of books since I started working in AI in 2002, and these are the ones that stood out and influenced my thinking. This list focuses on modern books, where I define “modern” as beginning in 1980, after the period of disembodied logic and search, when researchers realized that artificial intelligence needs to be embodied to achieve purpose and meaning. These 30 books, presented in chronological order over the last 44 years, each get at a piece of the puzzle of what it will take to move beyond LLMs to expand the capabilities of AI.

Metaphors We Live By (1980) by George Lakoff and Mark Johnson

Modeling human thought through formal methods such as first-order logic seems like a natural choice, but these systems always fall short of capturing the richness of human cognition. Lakoff and Johnson argue for the necessity of less objective representations, proposing that much of human thought relies on understanding abstract concepts through metaphors rooted in lived experience. This metaphorical thinking enables us to grasp abstract concepts like “love” by relating them to more familiar ideas, such as a journey or a war (depending on your relationship). As another example, consider what an “argument” is. An argument can be understood as a container. You can say that someone’s argument doesn’t hold water, you can say that it is empty, or you can say that the argument has holes in it. You can also understand an argument as a building, having either a solid or a poor foundation. These metaphors are much more sophisticated than predicates or rules, and to understand language and abstract concepts, computers must map them to physical or simulated lived experience.

Metamagical Themas: Questing for the Essence of Mind and Pattern (1985) by Douglas Hofstadter

Hofstadter argues that it is impossible to build one model of the world and have it be complete, even for simple phenomena. For example, the shape of the letter “A” in a font can require an unbounded number of levers and knobs to model, with the serifs and where you put the bar across, and on and on. The consequence of this realization is that systems must be able to build their own formal representations on the fly for each new situation. The book is a collection of individual essays, and you don’t have to read them all. I find his writing style slightly off-putting for a reason I can’t quite put my finger on, but there is no denying that he is right.

Understanding Computers and Cognition: A New Foundation for Design (1986) by Terry Winograd and Fernando Flores

Winograd and Flores use the term autopoiesis (coined by Maturana and Varela in 1972) to describe the process by which a system builds itself by continually constructing what it needs to maintain itself and adapt to new situations. Systems that exhibit autopoiesis are embodied in an environment, and their decisions are guided by the need to thrive there. In an embodied agent, the agent doesn’t directly know the environment; it only perceives a small part, and so the outside world can influence the agent’s internal state but not determine it. For example, the frog doesn’t see a fly; it instead only has a corresponding internal perception. The book also discusses conscious control. As an agent lives in a situation, it follows simple behavior patterns that only require attention when things break down. The book invokes Heidegger and states that when you are hammering, the hammer does not exist: it only pops into existence when there is a breakdown and something doesn’t work, such as the head falling off. (Or for us computer people, the mouse doesn’t exist until it stops working.)

The Society of Mind (1986) by Marvin Minsky

Marvin Minsky argued that the world can be understood through frames. A frame is a set of questions to be asked about a hypothetical situation, such as a kid’s birthday party, which consists of an entertainment, a source of sugar, and opening presents. The frame of the birthday party sets up the representation for how to talk about it and understand any particular party. This book is a joy to read because it is broken up into many small chapters. The book discusses frames, but it mostly focuses on the mind as a society of agents, which is particularly relevant right now because specialized agents are one of the most common ways to deploy LLMs using tools such as LangChain.

The Body in the Mind: The Bodily Basis of Meaning, Imagination, and Reason (1987) by Mark Johnson

This book builds on the metaphorical reasoning of Metaphors We Live By and dives into the patterns that we use to map experience to meaning. Through evolution, our brains developed specific patterns of thought for various situations, with some patterns proving so broadly useful that they are reused across different contexts. Mark Johnson describes those patterns of thought and refers to them as image schemas. The image schemas are lower-level and underpin Minsky’s frames. Frames are specific to cultures, while image schemas are general across cultures. Examples of image schemas include path, force, counterforce, restraint, removal, enablement, attraction, link, cycle, near-far, scale, part-whole, full-empty, matching, surface, object, and collection. Meaningful understanding begins with image schemas.

Mirror Worlds: The Day Software Puts the Universe in a Shoebox … How It Will Happen and What It Will Mean (1991) by David Gelernter

A mirror world is a copy of the physical world in software, a simulation of a system that is continually updated with real-world data. The main example used in this book is a hospital. With a mirror version of the hospital, you can try out new policies and observe their effects. This mirror-world concept has since evolved into what is called a digital twin, which is often a digital version of a machine that can be tested. It also underpins work in simulated environments for robot learning, such as the tool by NVIDIA called Isaac Sim. Simulation is a crucial piece of the puzzle, but to build smart AI, we need systems that can generate their own situations to simulate. As we saw with Metamagical Themas, since situations are unbounded in complexity, simulations need to be autonomously customized to answer the question at hand.

Machine Learning (1997) by Tom Mitchell

Tom Mitchell addresses one of the most overlooked fundamentals in machine learning: the concept of a hypothesis space. A hypothesis space is the space of all models that a machine learning algorithm can choose from. For example, in linear regression with y = mx + b, the hypothesis space is the set of all possible values of m and b. This idea is more significant than people realize because if you can build the right hypothesis space consisting of high-level meanings, such as image schemas, you can do much more powerful learning than you can with a larger, less expressive space, such as 50 billion parameters in a transformer model. This book is unusual on this list for two reasons: 1. It is the only textbook, and 2. It is one of only two books from the 1990s (they were a dark time). It’s on the list in spite of being a textbook because it is so clear and compelling that you can just sit down in an armchair and read it. It covers the timeless fundamentals of the field, and a PDF copy is available for free online.
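To make the hypothesis-space idea concrete, here is a minimal sketch in Python. It is my own illustration, not something from Mitchell's book. The toy data, the coarse integer grid for m and b, and the helper name squared_error are all assumptions chosen to keep the space small enough to enumerate.

```python
from itertools import product

# Toy data generated by y = 2x + 1 (an assumption made for illustration).
data = [(0, 1), (1, 3), (2, 5), (3, 7)]

# A deliberately tiny hypothesis space: every (m, b) pair on a coarse integer grid.
slopes = range(-3, 4)
intercepts = range(-3, 4)
hypothesis_space = list(product(slopes, intercepts))


def squared_error(m, b, points):
    """Sum of squared residuals of the line y = m*x + b on the given points."""
    return sum((y - (m * x + b)) ** 2 for x, y in points)


# "Learning" here is nothing more than a search over the hypothesis space.
best_m, best_b = min(hypothesis_space, key=lambda h: squared_error(h[0], h[1], data))
print(len(hypothesis_space), best_m, best_b)  # prints: 49 2 1
```

The learner can only ever return one of the 49 lines in the grid, which is the point: what you allow into the space determines what can be learned at all.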

The Way We Think: Conceptual Blending And The Mind’s Hidden Complexities (2003) by Gilles Fauconnier and Mark Turner

Fauconnier and Turner argue that 50,000 years ago humans gained the ability to think flexibly, enabling language and culture to evolve together, which ultimately led to our technological explosion. They argue that this flexible thinking originated from the ability to blend different cognitive frames together. To understand blending, consider the cartoon character Puss in Boots. The humor of the movies comes from the character blending the characteristics of both a human and a cat. Flexible thinking comes from compositionality, and blending is an important way to compose.

What Is Thought? (2003) by Eric Baum

What Is Thought? highlights how structured representations such as image schemas and frames can hold meaning. Baum argues that compression of patterns turns syntax into semantics. Consider again using the linear model y = mx + b to fit a set of data y = f(x) that varies along a feature x. This is called curve fitting. If the line fits well, the model is a condensed representation of the process that generates those points. If the condensed model comes from a relatively small hypothesis space, it will likely generalize to unseen points: because there are relatively few models in the hypothesis space, there aren’t enough of them for one to fit the training points by chance alone. Therefore, if you find a model that works from this limited space, it is likely representing the generative process that made the points, and therefore the model encodes meaning. As we will see in The Case Against Reality, this model may not actually represent reality, but we will also see in The Beginning of Infinity that models serve as explanations, and explanations are powerful.
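The generalization argument can be sketched with a small experiment. This is my own toy setup, not Baum's. It assumes the points come from y = 2x + 1 plus a fixed, hand-picked noise vector, and it compares a line (a small hypothesis space) against a Lagrange interpolating polynomial (a space large enough to hit every training point exactly).

```python
# Noisy samples of the (assumed) generating process y = 2x + 1.
xs = [0, 1, 2, 3, 4, 5]
noise = [0.3, -0.4, 0.2, -0.3, 0.4, -0.2]
ys = [2 * x + 1 + e for x, e in zip(xs, noise)]


def fit_line(xs, ys):
    """Least-squares fit from the small hypothesis space {y = m*x + b}."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    m = sxy / sxx
    b = mean_y - m * mean_x
    return lambda x: m * x + b


def fit_interpolant(xs, ys):
    """Lagrange interpolation: a polynomial that passes through every training point."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            weight = 1.0
            for j, xj in enumerate(xs):
                if j != i:
                    weight *= (x - xj) / (xi - xj)
            total += yi * weight
        return total
    return predict


line = fit_line(xs, ys)
poly = fit_interpolant(xs, ys)

x_new, y_true = 7, 2 * 7 + 1  # an unseen point from the same process
line_error = abs(line(x_new) - y_true)
poly_error = abs(poly(x_new) - y_true)
print(f"line error: {line_error:.2f}, polynomial error: {poly_error:.2f}")
```

The polynomial has zero training error because it memorizes the noise, and it misses the unseen point badly. The line cannot memorize the noise, so whatever fit it achieves reflects the underlying process.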

The Foundations of Mind: Origins of Conceptual Thought (2004) by Jean Mandler

Mandler describes how meaning arises in the human mind. She focuses on how humans use image schemas from the viewpoint of developmental psychology. She describes a process of “perceptual meaning analysis” whereby the brain converts perception into a usable structural form. You can think of this book as a modern version and extension of the work done by Jean Piaget.

On Intelligence: How a New Understanding of the Brain Will Lead to the Creation of Truly Intelligent Machines (2005) by Jeff Hawkins

Hawkins begins with a lucid explanation of the brain and the neocortex. He describes the neocortex as having six layers and as being about the thickness of six business cards. He argues that the cortical column is the right level of abstraction to think about the brain from an AI perspective. And he argues that the neocortex (cortex for short) only runs one algorithm to do all of its diverse processing. He points out that once light and sound enter the brain, the processing is similar, just neurons firing.

He divides the brain into the old brain and the cortex. He describes how the old brain regulates breathing and makes you run in fear. Reptiles only have the old brain; they have a set of 8 or 9 behaviors that they do. The neocortex is a prediction mechanism that sits on top of the old brain. Initially, it allowed the organism to execute its core behaviors better, but as it expanded it allowed for new behaviors. Humans have more connections between their premotor cortex and movement than other animals, which is why we have such fine movements.

For Hawkins, learning is embodied and comes through predictions. He focuses on the importance of sequences in learning. For example, he discusses how our sense of touch isn’t very useful if we don’t move our hand around as we touch something. He also argues that the brain uses memory more than computation because our neurons are slow, and so computation can’t take many levels. Memories are faster, and these memories are used for predictions. As we walk to our front door, we predict how it will look, and when we reach out for the doorknob we predict where it will be and how it will feel, and if something doesn’t match our prediction it is immediately brought to our attention (reminiscent of Winograd and Flores). He also describes our vision as being based on prediction. Consider a picture of a log on the ground; if you take that photograph and blow it up you see that there is no clear line where the log ends and the ground begins; when we look at that log we create the line.

Stumbling on Happiness (2007) by Daniel Gilbert

It sounds like a self-help book, but it isn’t. The key insight is how we simulate the world. Our mental simulations feel complete, but they are not. Imagine your favorite car. Got it? Okay, what does the bumper look like? I don’t know, and you might not know what yours looks like either. Simulation of the world is a key piece needed for AI, but this simulation can’t cover everything, and Gilbert discusses how humans do it.

I Am a Strange Loop (2007) by Douglas Hofstadter

The strangeness of self-reference comes up a lot. Consider the old barber who cuts the hair of everyone who doesn’t cut their own. Does he cut his own hair? If he does, he doesn’t. And if he doesn’t, he does. Self-reference is at the heart of the halting problem and Gödel’s incompleteness theorem. The escape, of course, is that we need a process at one level watching a separate process at the level below. You can’t smash everything into one level. This lack of meta-control is evident when using LLMs. I once had a problem where ChatGPT couldn’t answer questions about calculus. Digging, I found that it could generate the correct Python code, but for some reason it couldn’t run it. ChatGPT didn’t understand this because there was no process watching the process that could have seen where the breakdown was occurring. Hofstadter argues that consciousness could be the result of many such loops of processes watching lower ones, and such a breakdown would be the kind of breakdown that would trigger consciousness to intervene, just as having the head fly off your hammer would make you suddenly aware that you were wielding the tool.
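The barber's rule from the start of this section can be checked mechanically. The sketch below is my own illustration, and the function name consistent is hypothetical. It tries both possible answers and finds that neither survives the rule when it is applied to the barber himself.

```python
def consistent(barber_cuts_own_hair: bool) -> bool:
    """Check one candidate answer against the barber's rule.

    Rule: the barber cuts a person's hair exactly when that person
    does not cut their own. Applied to the barber himself, the rule
    demands the opposite of whatever he actually does.
    """
    rule_demands = not barber_cuts_own_hair
    return barber_cuts_own_hair == rule_demands


# Enumerate both answers to "does the barber cut his own hair?"
solutions = [answer for answer in (True, False) if consistent(answer)]
print(solutions)  # prints: []
```

The empty list is the paradox: no answer at the barber's own level is consistent, which is why the question has to be settled by something standing one level above it.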

The Philosophical Baby: What Children’s Minds Tell Us About Truth, Love, and the Meaning of Life (2009) by Alison Gopnik

We’ve seen how consciousness serves as an executive and unifier of thought when there is a breakdown. Because adults have more experience than children (and therefore fewer breakdowns), the psychologist Alison Gopnik makes the interesting claim that children are more conscious than adults. That is why it takes them so long to put on their shoes. She also describes how babies and children make causal theories as they interact with the world. Children’s exploration is broader than that of adults, they inhibit fewer actions, and they pay attention to more things. She also describes how consciousness is different for children than for adults. When young, they don’t have an inner monologue, and their consciousness is much more outwardly focused.

Supersizing the Mind: Embodiment, Action, and Cognitive Extension (2010) by Andy Clark

My friends thought it was funny that I would turn my couch upside down when there was something I needed to remember to do later. Clark’s extended mind hypothesis is that the external world is part of our cognition. A significant part of cognition is retrieving information for the task, and that information can be stored in our heads or outside in the world, such as with notes. These computations can happen in loops, such as when we execute the multiple steps of long division with pencil and paper. Clark’s extended mind hypothesis takes on even greater importance as LLMs are beginning to use API calls to other tools to help answer questions. As those API calls spawn their own calls, it may become increasingly nebulous where an intelligent agent begins and ends.

Embodiment and the Inner Life: Cognition and Consciousness in the Space of Possible Minds (2010) by Murray Shanahan

Building on this idea of a breakdown leading to conscious thought, Murray Shanahan explains consciousness as a process that happens when the world breaks our expectations, similar to the discussion in Understanding Computers and Cognition. Lower animals are behavior based; they jump from one behavior to the next. Consciousness is the ability to take competing pieces of those behaviors and recombine them into something new. This recombining happens in a global workspace. Shanahan discusses how consciousness comes from a web in the brain and that parts fight for the right to control that web. It is a dynamical system. Processes jump into the system and try to create coalitions, with the parts being reminiscent of The Society of Mind. Those coalitions that win make it into consciousness.

I’m always surprised at how my consciousness seemingly drops in and out. One time, I was driving and trying to decide if I should get tacos, and the next thing I knew I was in line at Tacodeli. Another time I could feel that my teeth were clean and I could tell that my toothbrush was wet, but I had no memory of the brushing. If you pay attention, you’ll start to notice that your externally focused consciousness isn’t as continuous as it feels.

Mind in Life: Biology, Phenomenology, and the Sciences of Mind (2010) by Evan Thompson

Mind in Life covers embodiment and autopoiesis from a philosophical perspective. Philosophers make me angry because they tend to use jargon only known to other philosophers, and so it is hard to know if they are saying anything useful. But this book made good sense on the second reading. It discusses how embodied agents do not have models of the external world. Instead, they have representations that allow them to act in the world.

How the Mind Works (2011) by Steven Pinker

Pinker describes human cognition from a perspective that straddles psychology and linguistics. Harking back to Metaphors We Live By, the book discusses how there are two fundamental metaphors in language: 1. location in space, and 2. force, agency, and causation.

Steven Pinker is probably my favorite non-fiction author. In this book, he goes into depth on many aspects of human intelligence. He describes how humans view artifacts from a design perspective, so if you turn a coffee pot into a bird feeder, it is a bird feeder. Humans view plants and animals from an essence perspective, so if you put a lion mane on a tiger, it is still a tiger. And humans view animal behavior from an agent perspective. Animal actions (including humans) are governed by goals and beliefs.

He also describes how our brains are not wired for abstract thought; we have to learn that in school. This is evidence that formal approaches such as logic or OWL are insufficient. The kinds of inferences that these systems are built for are not how humans think. Human thinking is less exact but more flexible, and our knowledge is not internally consistent.

And Pinker explains human kinship relationships and romantic love in starkly plain words. “Love” is an emotional binding contract that both people in a relationship can rely on to keep them together, even if one partner comes across a better option. I wonder what 16th-century Spanish poets would think of that.

The Beginning of Infinity: Explanations That Transform the World (2011) by David Deutsch

AI that can extend human knowledge will do so by building causal models. I consider causal models to be synonymous with explanations, and it’s easy to forget how powerful they are. When we look up at the night sky, we see stars; but the “stars” are in our head, and what we actually see is just points of light. The idea of a “star” as an enormous ball of burning gas trillions of miles away came to humanity through centuries of work. David Deutsch dives into what makes good explanations. For example, one hallmark of good explanations is that no part can be altered and still explain the phenomena because each piece plays a key causal part.

Louder Than Words: The New Science of How the Mind Makes Meaning (2012) by Benjamin K. Bergen

Our internal representations of the world “mean” something because they are tied to a web of actions and expected outcomes. This book does a great job of describing how knowledge has to be embodied to help an agent interact with the world. Benjamin Bergen outlines how we activate the relevant parts of the brain’s motor system when we understand action words. Consider the example of someone saying that she was bitten while feeding a monkey. Most of us have never fed a monkey, but we all know where she was bitten. (I can’t remember who came up with this example. If you do, please let me know so I can credit it.)

A User’s Guide to Thought and Meaning (2012) by Ray Jackendoff

Ray Jackendoff describes how humans map sensation to meaning. The sensation in his theory takes the form of tokens. While tokens have taken on significantly more importance since the rise of large language models (LLMs), Jackendoff’s tokens are more generalized than LLM tokens: they are instances of classes, like a particular chair. Jackendoff discusses how these tokens get mapped to a deeper conceptual structure through a process of judgment. This mapping process highlights how LLMs are insufficient because they have little or no internal structure to map to.

The Geometry of Meaning: Semantics Based on Conceptual Spaces (2014) by Peter Gärdenfors

Peter Gärdenfors discusses the nuts and bolts of language understanding, beginning with how conversation is based on shared reference. The first step is to physically point to objects in the world so that we and our conversational partner know that we are referencing the same things. Before children can speak, they use this pointing to convey desire for objects and for shared interaction. This process is a springboard, because as we learn language, words are able to point to shared ideas in our minds.

My favorite part is his description of how we negotiate meaning in the course of a conversation. A coordination of meaning is required when two people have two different ways to describe concepts. And a coordination of inner worlds is required when one person has a concept not shared by the other. LLM-based systems currently do a poor job of learning during a conversation and using what they have learned in future conversations with you.

The Book of Why: The New Science of Cause and Effect (2018) by Judea Pearl

Causal models are required for general intelligence, and Pearl is the authority on causality in AI. I dislike the phrase “correlation does not imply causation” because while it is true, correlation does tell you where to start looking. Pearl’s book explains how to tease out the difference and to go from correlation, which doesn’t enable an agent to control its environment, to causal models, which do. If you like the story told in this book and want to go deeper, you can then move on to his other books, Causality and Probabilistic Reasoning in Intelligent Systems.
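The gap between correlation and control can be shown with a toy simulation. This is a sketch under my own assumptions, not an example from Pearl's book: a hidden common cause Z drives both X and Y, X has no effect on Y at all, and the probabilities and function names are invented for illustration.

```python
import random


def simulate(n, do_x=None, seed=0):
    """Sample (x, y) pairs from a toy model Z -> X and Z -> Y; X never affects Y."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = rng.random() < 0.5                      # hidden common cause
        if do_x is None:
            x = rng.random() < (0.9 if z else 0.1)  # observation: X follows Z
        else:
            x = do_x                                # intervention: X is set by hand
        y = rng.random() < (0.8 if z else 0.2)      # Y depends only on Z
        rows.append((x, y))
    return rows


def p_y_given_x(rows, x_value):
    """Fraction of rows with y true among rows where x equals x_value."""
    ys = [y for x, y in rows if x == x_value]
    return sum(ys) / len(ys)


# Seeing: compare Y across the X groups that arise naturally.
obs = simulate(20000)
seeing = p_y_given_x(obs, True) - p_y_given_x(obs, False)

# Doing: force X on, then force X off, and compare Y.
doing = (p_y_given_x(simulate(20000, do_x=True, seed=1), True)
         - p_y_given_x(simulate(20000, do_x=False, seed=2), False))

print(f"seeing: {seeing:.2f}, doing: {doing:.2f}")
```

In the observational data, X looks strongly predictive of Y. When X is set by hand, the difference collapses to roughly zero, so an agent that acted on the correlation would gain no control over Y. The correlation is still a useful hint: it points at the neighborhood where Z is hiding.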

Rebooting AI: Building Artificial Intelligence We Can Trust (2019) by Gary Marcus and Ernest Davis

I consider LLMs to be a wonderful surprise because I never thought we could get this far just by predicting the next word, but LLMs are not sufficient for full intelligence, and Marcus and Davis explain why. Consider one of their examples: Person A says they found a wallet, and person B immediately checks their back pocket. Why did person B do that? Marcus and Davis argue that what is needed is AI that uses reusable and composable abstractions to model its environment.

Rethinking Consciousness (2019) by Michael S. A. Graziano

Graziano argues that the key to consciousness is attention. Overt attention is looking at something out in the world, but covert attention is paying attention to something you are not looking at. He says that covert attention is the key to consciousness. Since you can attend to internal things, you need a model of that attention. This creates consciousness.

The Case Against Reality: Why Evolution Hid the Truth from Our Eyes (2019) by Donald Hoffman

Donald Hoffman argues that evolution endowed us with the ability to perceive the world to survive but not necessarily to know how the world actually is. He uses the analogy of an icon on your desktop. It takes you to the program, but it doesn’t represent what is happening underneath. He also uses apples as an example. When you hold an apple, there is no apple in your hand. The apple is in your brain. There is physical stuff out there, but we have no way of knowing what it really is. We only know that our perception of the apple is good enough to survive. This idea that we have representations to survive but not objective models of the world ties back to Mind in Life by Thompson and even back to Plato with his allegory of the cave.

A Thousand Brains: A New Theory of Intelligence (2021) by Jeff Hawkins

Like his other book, this book also provides a clear and understandable discussion of the brain. Jeff Hawkins views intelligence in terms of sequences of states and grids of states related together. For example, the handle of a coffee mug has a spatial relationship to the bottom that is consistent as the mug moves around.

Journey of the Mind (2022) by Ogi Ogas and Sai Gaddam (first half)

They put forth a compelling biological progression of how intelligence could have evolved. The book takes us step-by-step from life’s inception to smart animals. It describes the formation of the first effector and the first sensor. My favorite part is when Ogas and Gaddam describe how purpose came into being on our planet when, by chance, the first sensor element connected to the first effector. You can read just the first half; I didn’t enjoy the second half as much.

The World Behind the World: Consciousness, Free Will, and the Limits of Science (2023) by Erik Hoel

This book starts as an insider exposé on the limitations of neuroscience research results. It then pivots to philosophical themes such as the zombie hypothesis: whether it is possible to live as we do without any kind of conscious experience. But my favorite part is the last quarter where he talks about finding causal models at different levels of abstraction. Using the principles of error correction, Hoel shows that there are causal relationships that are easier to identify at higher levels of abstraction than at lower levels. By trading off with information loss, it is possible to find the best level of abstraction. Erik Hoel also has related work on dreams being low-fidelity abstractions. Because of this low fidelity, the movie Dream Scenario, where a guy becomes famous by appearing in everyone’s dreams, couldn’t happen. Dreams are too low fidelity to register a new face that we would recognize in real life.

A Brief History of Intelligence (2024) by Max Bennett

Simulating the world forward is likely the key to general intelligence. This book beautifully explains intelligence from its biological origins, and it describes how analogs of different AI algorithms might be running in the brain, ultimately leading to these simulations. The explanations are undoubtedly simplified, but an incomplete scaffolding is always a good place to start.

Conclusion

The next-word prediction method of LLMs will not get us to real artificial intelligence. It’s possible that we can design and train a new neural architecture in an end-to-end fashion that does get us there, but since we don’t have evolutionary time, energy, or computation, it is more likely that we will need to implement the ideas in these books.

The answer that emerges from the books in this list is that for an AI to understand the world and invent new things it must be able to take the current situation and put it on an internal mental stage. It should then simulate that situation forward to predict the future. That simulation should run at the level of abstraction appropriate to the question it wants to answer. To acquire the knowledge necessary to build the stage and run the simulation, it should be able to read the internet and convert it to its own knowledge format (not just to predict the next token) and be able to act in a real or simulated environment to obtain a grounded understanding and run experiments. When real AI emerges, it will likely take the form of a hybrid system that uses neural networks to make subtle decisions in perception and action and to dynamically build the appropriate symbolic representation for each task. I don’t know how long this AI will take to build, but hopefully it won’t be another 44 years.

Thanks to Noah Mugan for helpful comments and suggestions.