We want to know the present so that we can predict the future. When the ability to predict exceeds inferences that can be made with commonly accessible information, we call it precognition. The idea of precognition was made salient by the 2002 movie Minority Report. In that movie, there is a scene where one of the oracles leads the protagonist through a shopping mall and evades pursuers by knowing exactly where each pursuer will be and in which direction that pursuer will gaze. The oracles in Minority Report could even predict future crimes based on perfect knowledge of the present and the assumption of a deterministic world.

We regular humans cannot know the physical world in this way, but we can do something close in the mirror world. The idea of a mirror world was first proposed by David Gelernter [1]. A mirror world consists of a digital representation of every object and event in the physical world. We find our lives increasingly reflected in this world, as many of our activities have a mirror representation in digital form. Photographs are online images instead of physical objects stored in closets, old-fashioned soapbox rants are blog posts, conversations are tweets, and transaction ledgers are database tables. Now that these objects and events are in digital form, they can be processed by a computer, and we can bring to bear all of the progress in artificial intelligence and machine learning to use that data about the present to predict the future.

The challenge is that mirror-world data is often unstructured and comes with high volume, velocity, and variety [2], and therefore we must distill it down to actionable information. Consider the example of social media. Social media, such as Twitter and Facebook, serves as a real-time window on the events, thoughts, and communications of distant areas. Social media is particularly valuable for time-sensitive events, such as those that occur in a natural disaster. During the recent Hurricane Sandy, first responders needed to know where the electricity was out, if and where there was looting, and where people needed the most help. The most timely information about these events came from people directly observing and recording them with their mobile phones. But, while the information was all there, it was buried in an unholy amount of useless Twitter chatter, a problem that can be compounded when people deliberately post false information and rumors. How can first responders find structure in a mess of social media data to extract a manageable set of useful insights?

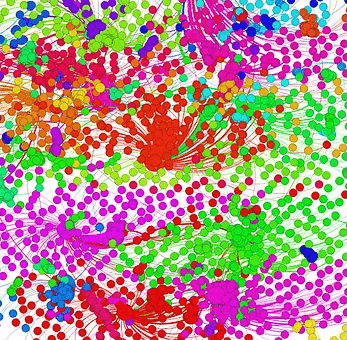

The structure needed to make sense of Big Data will be different in each domain. In social media, one bit of structure that can be employed is to use machine learning to identify groups of individuals that communicate consistently over time. Knowledge of these groups can provide first responders with the ability to identify the central actors and their associated members. Some groups will not be reporting about the event, or will be known to report it unreliably, and the data from those members can be ignored, leaving the analyst with the reliable and relevant data from which it is easier to draw conclusions.
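To make the idea of groups that communicate consistently over time concrete, here is a minimal sketch. It does not use a learned model; it swaps in a plain graph heuristic, keeping only pairs of users who exchanged messages in several distinct time windows and then reading groups off as connected components. The input format `(window, sender, receiver)`, the function name `consistent_groups`, and the threshold `min_windows` are all assumptions made for illustration.

```python
from collections import defaultdict


def consistent_groups(messages, min_windows=2):
    """Group users who communicated in at least `min_windows` time windows.

    `messages` is an iterable of (window, sender, receiver) tuples, where
    `window` is any hashable time bucket (an hour, a day, ...). This format
    is a hypothetical one chosen for the sketch.
    """
    # Record the distinct time windows in which each pair communicated.
    windows_per_pair = defaultdict(set)
    for window, sender, receiver in messages:
        if sender != receiver:
            windows_per_pair[frozenset((sender, receiver))].add(window)

    # Keep only pairs whose communication recurs across enough windows.
    neighbors = defaultdict(set)
    for pair, windows in windows_per_pair.items():
        if len(windows) >= min_windows:
            a, b = tuple(pair)
            neighbors[a].add(b)
            neighbors[b].add(a)

    # Each connected component of the remaining graph is one group.
    groups, seen = [], set()
    for start in neighbors:
        if start in seen:
            continue
        stack, group = [start], set()
        while stack:
            user = stack.pop()
            if user in seen:
                continue
            seen.add(user)
            group.add(user)
            stack.extend(neighbors[user] - seen)
        groups.append(group)
    return groups
```

An analyst could then drop every group known to be off-topic or unreliable and read only what the remaining groups post. A real system would likely replace the fixed threshold with a model fitted to the data.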

The goal for data researchers is to create useful structures to understand each important domain, but there are two challenges that are common across domains. The first challenge is that the data is about people, and we must devise methods that allow users of the data to help people while simultaneously ensuring that they, or the next entity that receives the data, cannot use the data to do harm. The second challenge is that it is difficult to judge the reliability of machine learning algorithms on Big Data. For the Hurricane Sandy example, how do you know that the algorithm is really learning the right groups? Or, consider the case of using social media to identify important events. How do you identify a set of ground-truth events so that you can measure reliability? Watch CNN? The whole point of using social media is to go beyond the insights that can be gained by watching cable news. The mirror world is becoming as rich as the physical one, and determining the ground truth for evaluating learning algorithms can be as complex as solving the problem directly.

The streaming nature of Big Data also requires that we adjust our thinking. The mirror world changes as fast as the physical one, so it cannot be represented with a static dataset. Indeed, the very idea of a Big Data dataset is an oxymoron. Big Data is a stream, and you have to know where to point your collection device, because you can’t store it all. The speed at which the data goes by also means that it can be computationally infeasible to do complex math, and researchers should instead focus on getting the right data so that inference is easy. The good news with Big Data is that you don’t have to be 100% accurate, because even a small improvement over a large phenomenon can make a big difference.
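As a rough illustration of treating the data as a stream rather than a dataset, the sketch below makes one pass over incoming posts, discards anything that does not mention a term of interest (one simple way to "point the collection device"), and keeps only cheap counts over the most recent relevant posts. The function name `recent_keyword_counts`, the keyword set, the whitespace tokenization, and the window size are all assumptions chosen for the example.

```python
from collections import Counter, deque


def recent_keyword_counts(stream, keywords, window_size=1000):
    """Count keyword mentions over the last `window_size` relevant posts.

    `stream` is any iterable of text posts; it is read once and never stored
    in full. Memory use is bounded by `window_size`, not by stream length.
    """
    window = deque()    # keyword hits for the most recent relevant posts
    counts = Counter()  # running totals for the posts still in the window
    for post in stream:
        # Naive tokenization (an assumption): lowercase, split on whitespace.
        hits = set(post.lower().split()) & keywords
        if not hits:
            continue  # irrelevant post: dropped immediately, never stored
        window.append(hits)
        counts.update(hits)
        if len(window) > window_size:
            # Forget the oldest relevant post so memory stays bounded.
            counts.subtract(window.popleft())
    return +counts  # unary plus drops keywords whose count fell to zero
```

The arithmetic is deliberately trivial. The work goes into choosing which posts to keep, which matches the point that getting the right data makes the inference easy.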

ABOUT JONATHAN